Announcing EventHubs ReIngest

When building a Kappa Architecture, the ability to replay historic events is an important property of the system. EventHub and IoTHub support EventHub Capture, a way to automatically archive all incoming messages to Azure Blob or Azure Data Lake Store, which takes care of the archiving part.

To replay those messages back onto an EventHub (preferably a different one!), I created nathan-gs/eventhubs-reingest, a Spark-based application that reads the Avro messages, sorts them, repartitions them randomly, and writes them to EventHub as fast as possible.
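The sort-then-randomly-repartition idea can be sketched in a few lines of plain Python. This is only an illustration of the concept, not the code used in eventhubs-reingest: the function name `plan_replay`, the `(enqueued_time, body)` tuple layout and the `seed` parameter are all made up for this example, and the real application does this work with Spark rather than in-memory lists.

```python
import random
from collections import defaultdict


def plan_replay(events, num_partitions, seed=None):
    """Sort events by time, then spread them randomly over partitions.

    `events` is an iterable of (enqueued_time, body) tuples. Both the tuple
    layout and this function are hypothetical, for illustration only.
    """
    rng = random.Random(seed)
    partitions = defaultdict(list)
    # Events are appended in sorted order, so every partition's list
    # stays ordered by enqueued time.
    for enqueued_time, body in sorted(events, key=lambda e: e[0]):
        target = rng.randrange(num_partitions)  # random partition choice
        partitions[target].append((enqueued_time, body))
    return dict(partitions)


if __name__ == "__main__":
    sample = [(3, "c"), (1, "a"), (2, "b"), (5, "e"), (4, "d")]
    for partition, batch in sorted(plan_replay(sample, 3, seed=1).items()):
        print(partition, batch)
```

Random assignment spreads the load roughly evenly over the target partitions regardless of how the events were originally keyed, while sorting first keeps the events within each partition in time order.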

Other sources

EventHub Capture Avro messages are not the only supported input: any Spark SQL or Hive datasource can be used. Just specify a query as part of the configuration. Take a look at the README for more information.

Performance

On a:

- 3 worker node HDI cluster

- a target EventHub with

  - 12 partitions

  - 12 throughput units

- 5.6 GB of capture files, with some small and some large events:

  - 1,592 blobs

  - 5,616,207,929 bytes

We managed to process the data in 15 minutes.
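As a rough back-of-the-envelope check, the average throughput implied by these numbers can be computed directly. The sketch below treats "15 minutes" as exactly 900 seconds, which is an assumption, and uses decimal megabytes (1 MB = 1,000,000 bytes); the variable names are just for illustration.

```python
total_bytes = 5_616_207_929  # size of the capture files, from the list above
duration_s = 15 * 60         # "15 minutes", assumed exact for this estimate
throughput_units = 12        # throughput units on the target EventHub

# Average rate over the whole run, in decimal megabytes per second.
avg_mb_per_s = total_bytes / duration_s / 1_000_000
# The same rate divided evenly over the throughput units.
per_tu = avg_mb_per_s / throughput_units

print(f"{avg_mb_per_s:.2f} MB/s average, {per_tu:.2f} MB/s per throughput unit")
# prints: 6.24 MB/s average, 0.52 MB/s per throughput unit
```

This is an average over the full run, so the peaks visible in the charts will be higher than this figure.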

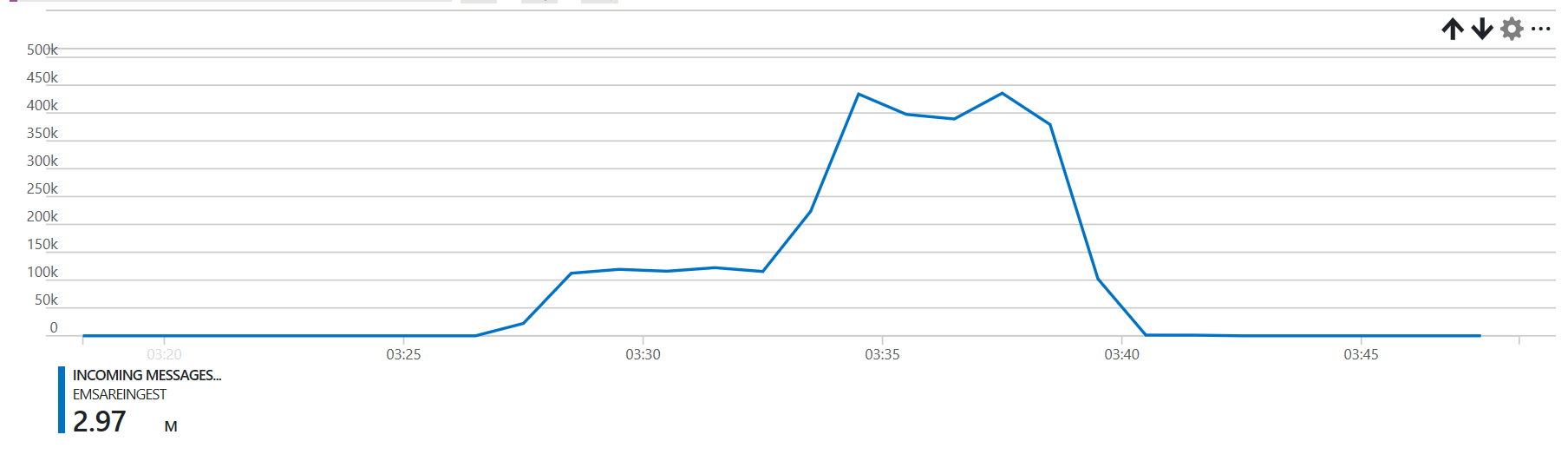

Throughput in MB

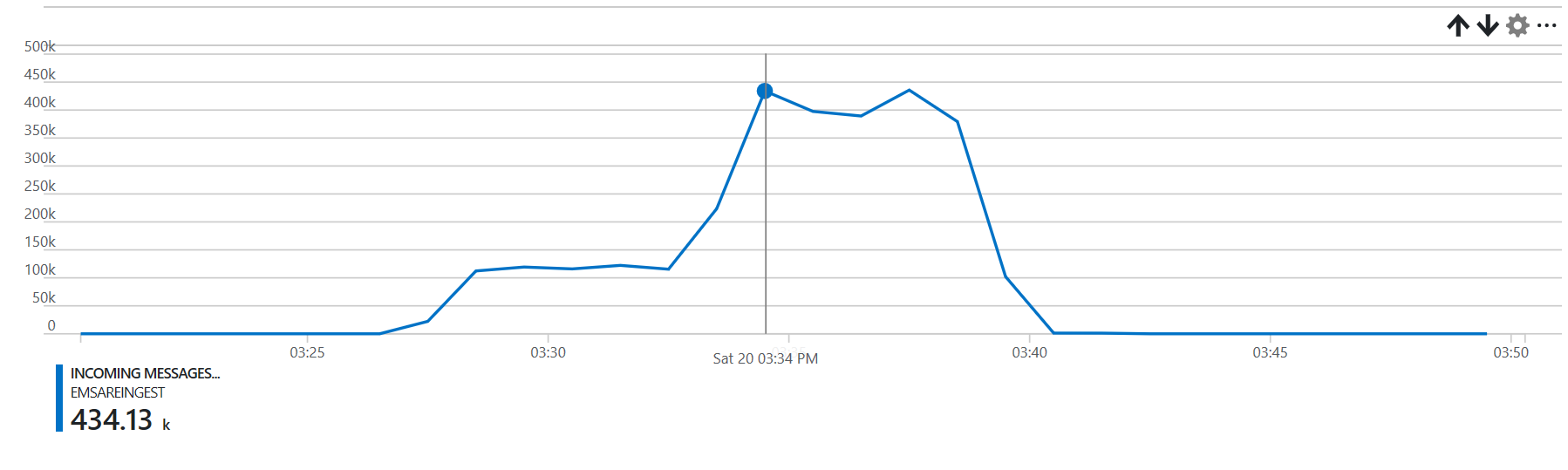

Throughput in msg/s

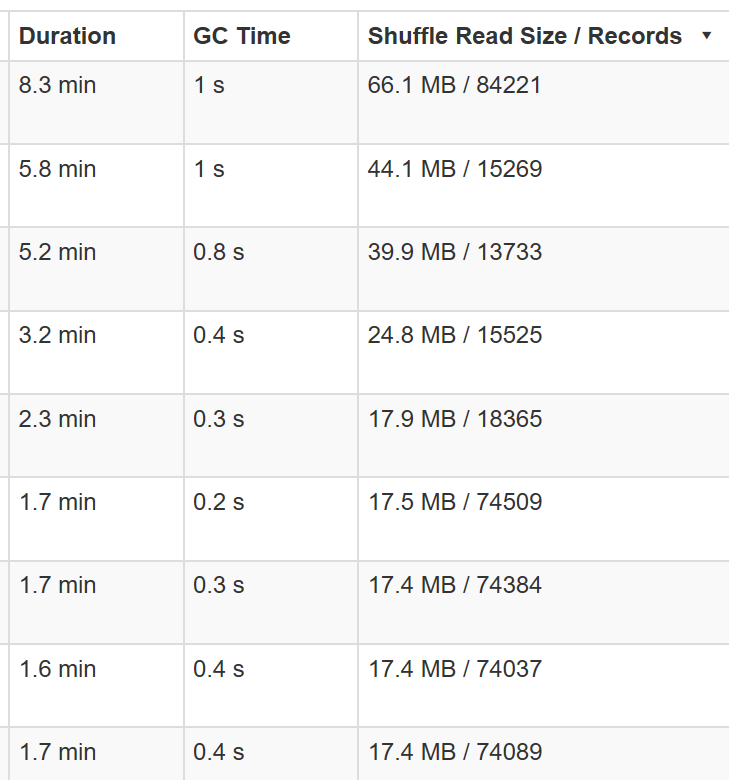

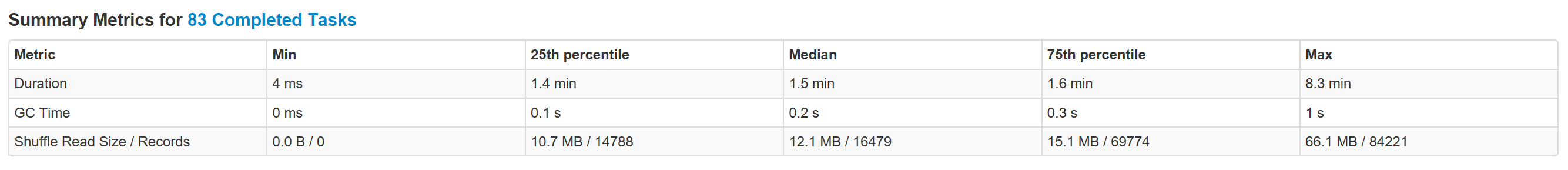

Distribution of Spark tasks

Note that the task time is highly correlated with the input size.