The Pizza Model for AI

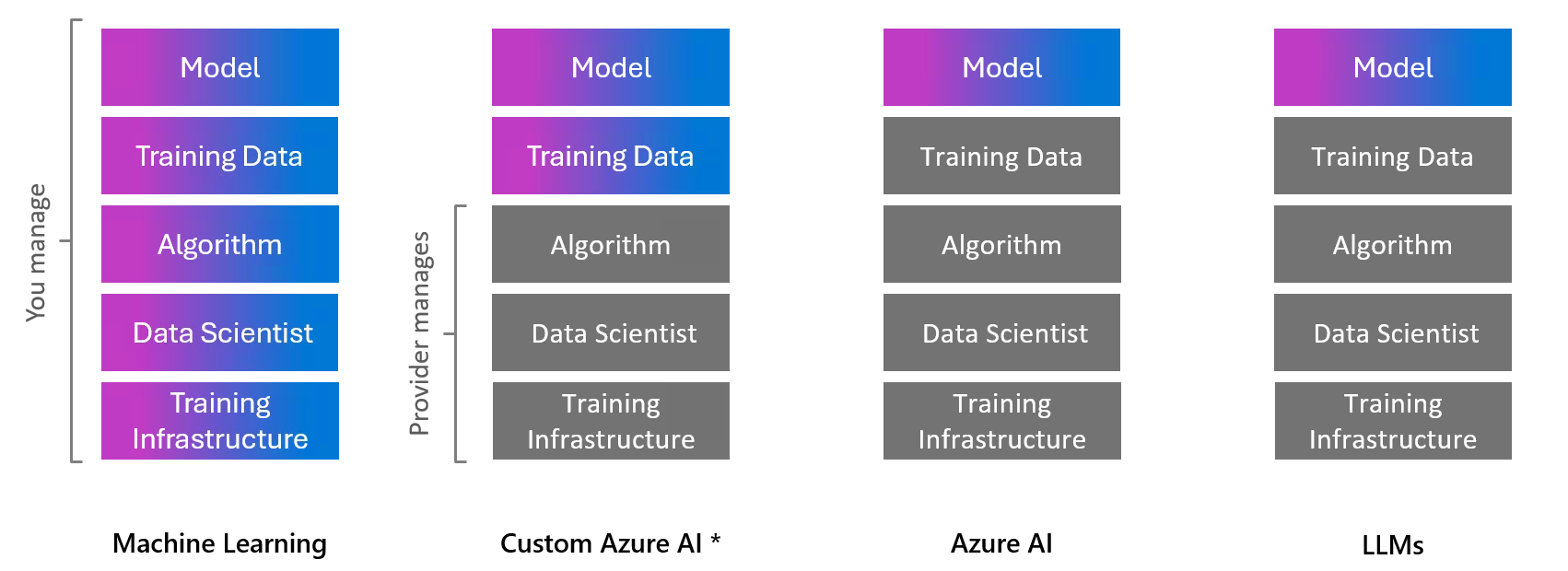

The Pizza Model for AI takes inspiration from the world of cloud computing, where services are often categorized into three main models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Drawing inspiration from these, the Pizza Model for AI emerges as a new paradigm, offering a layered approach to AI services, or what we might call “Models as a Service” (MaaS).

The Pizza Model for AI

- Training Infrastructure: The foundation of our pizza is the training infrastructure. This layer comprises the hardware and software that drive AI model training, including GPUs, TPUs, and distributed computing resources.

- Data Scientist: The next layer is the data scientist. This role involves crafting the recipe for our AI pizza, deciding which ingredients (features) will make the cut, and how to season (tune) the model to perfection.

- Algorithm: The algorithm forms the sauce of our pizza, spreading across the data to bring out the flavors. It’s the method by which the model learns from data, whether it be supervised, unsupervised, or reinforcement learning.

- Training Data: No pizza is complete without cheese, and in the AI pizza, that’s the training data. It’s the essential component that melts and binds everything together, providing the model with the information it needs to learn.

- Model: Finally, the toppings represent the model itself. Each topping (feature) adds a unique taste (prediction capability), and the combination of them defines the overall flavor profile (performance) of the AI model. Depending on the option and recipe taken, the model can be a file (pickle, onnx often in a Docker container) or an API.

Machine Learning

In “classical” Machine Learning the Data Scientist is responsible to select the right algorithms, do data prep and cleaning, do feature engineering and model selection. Comparable to a cook creating a pizza from scratch. The Data Scientist has full flexibility in tools, libraries and platforms. To not have to reinvent the pizza-oven the use of Azure Machine Learning, Azure Databricks or the Data Science profile in Microsoft Fabric.

A good methodology for this recipe is the Team Data Science Process.

Custom Azure AI services

Azure AI offers a suite of customizable services that extend the capabilities of pre-trained models, allowing you to tailor them with your specific data. This personalization ensures that the AI services are more aligned with your unique requirements and can perform with greater accuracy in your particular context.

- Azure AI Custom Speech: Fine-tune speech recognition models to understand industry-specific terminology or accents, enhancing their ability to recognize speech in various environments and scenarios.

- Custom Vision: Adapt image classification and object detection models to recognize niche objects or patterns specific to your business, improving the model’s precision and reliability.

- Custom Translator: Train translation models on your organizational documents to capture the nuances of your industry’s jargon, ensuring translations maintain the intended meaning and context.

These services build upon the robust foundation of Microsoft’s base models (see below), which have been developed using extensive datasets to cover a wide range of scenarios. By leveraging these customizable options, you can achieve a more bespoke AI solution that fits seamlessly into your operational workflow.

Azure AI services

Azure AI services are powerful AI models, trained on very large datasets. Azure AI offers a variety of services that cater to different aspects of artificial intelligence. Here are some specific examples:

- Azure AI Vision: This service provides capabilities such as optical character recognition (OCR), image analysis, face detection, and spatial analysis.

- Azure AI Speech: Offers speech-to-text, text-to-speech, translation, and speaker recognition functionalities.

- Azure AI Language: Helps build applications with advanced natural language understanding capabilities.

- Azure AI Search: Enhances mobile and web apps with AI-powered cloud search features.

- Azure AI Content Safety: Detects unwanted content to maintain content quality and safety.

- Azure AI Translator: Utilizes AI-powered technology to translate more than 100 languages and dialects.

- Azure AI Document Intelligence: Turns documents into intelligent, data-driven solutions.

- Video Indexer: Extract actionable insights from your videos.

These services are designed to help developers and organizations rapidly create intelligent applications that are market-ready and responsible. They can be accessed through REST APIs and client library SDKs in popular development languages, making them highly accessible for integration into various projects and workflows.

Large Language Models (LLMs)

Large Language Models (LLMs) like OpenAI’s GPT-4 represent a quantum leap in the field of artificial intelligence. These colossal models are the result of training on enormous datasets encompassing a wide swath of human knowledge, powered by substantial computational resources. GPT-4, for instance, is a multimodal model capable of processing both text and image inputs to generate text outputs.

- Vast Knowledge Base: LLMs are trained on datasets that include a diverse range of topics, languages, and formats, providing them with a broad understanding of human language and context.

- Advanced Comprehension: With the ability to process and generate human-like text, LLMs can perform tasks that require understanding of nuance, sarcasm, and cultural references.

- Multimodal Abilities: Some LLMs, like GPT-4, go beyond text to interpret and generate content based on images, bridging the gap between visual and linguistic data1.

- High Cognition: LLMs have demonstrated human-level performance on various professional and academic benchmarks, including passing exams designed for humans with scores in the top percentile.

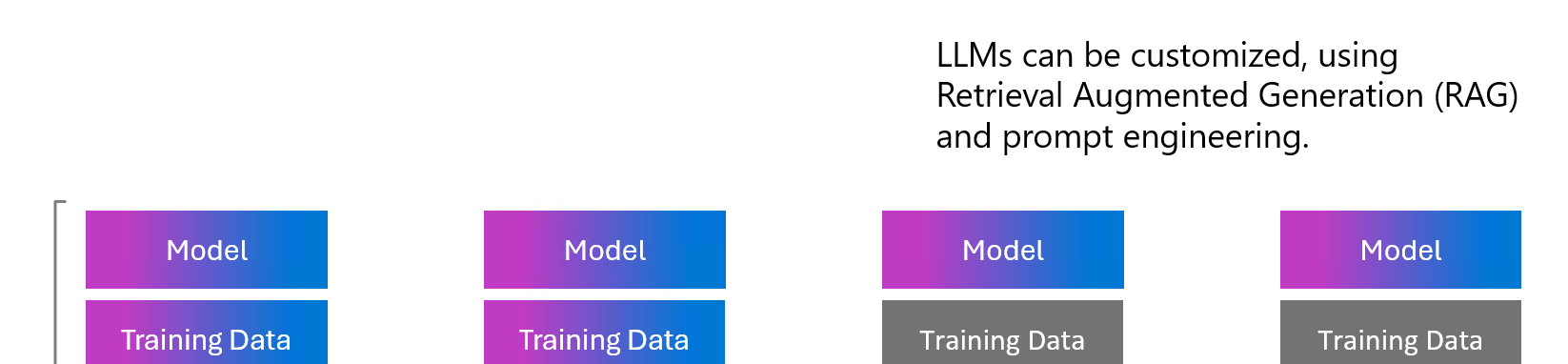

Customizing Large Language Models

Large Language Models are typically not fine-tuned with own training data after release, however there are ways to customize them using prompt engineering and Retrieval-Augmented Generation (RAG).

- Prompt Engineering: To customize large language models, one must become a skilled chef in prompt engineering. This involves carefully crafting prompts that guide the model to generate the desired output. It’s like telling the model, “Here’s what I’m looking for; now make it happen.”

- Retrieval-Augmented Generation (RAG): Another technique is Retrieval-Augmented Generation (RAG). Think of RAG as the secret spice blend that elevates a dish. It enhances a language model by allowing it to pull in external knowledge during the generation process, leading to more informed and accurate outputs.

Trustworthiness

To foster trustworthiness in machine learning and artificial intelligence, it is essential to establish robust feedback loops that not only refine algorithms for fairness, accuracy, and transparency but also adhere to ethical standards and incorporate diverse perspectives to mitigate biases.

Conclusion

The Pizza Model for AI serves up a comprehensive framework for understanding and deploying AI services. By considering each layer, from the infrastructure to the model, we can better decide which methodology fits your needs, making sure your next AI project is as delightful and satisfying as your favorite slice of pizza.